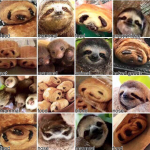

Trying to confuse Google’s Vision algorithms with dogs and muffins

When I saw this set of very similar pictures of dogs and muffins (which comes from @teenybiscuit’s tweets), I had only one question: how would Google’s Cloud Vision API perform on them?

At a quick glance, it’s not obvious even for a human to tell them apart, so how does the machine fare? It turns out it does pretty well. Check the results in this gallery:

(You can also find the album on Imgur.)

For almost every set, there is one tile that is completely wrong, but the rest are at least in the right category. Overall, I am really surprised by how well it performs.

You can try it yourself online with your own images here, and of course find the code on GitHub.

Technically, it is built entirely in the browser; there is no server-side component except, of course, what’s behind the API:

- Images are loaded from presets or via the browser’s File API.

- Each tile is extracted into its own image and encoded as base64.

- All the tiles are sent at once to the Google Cloud Vision API, asking for label detection results (this is what matters here, even though the API can do much more, such as face detection, OCR, landmark detection…).

- Only the label with the highest score is kept from the results and drawn back onto the main canvas.
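The pipeline above can be sketched as a handful of small TypeScript helpers. This is a minimal sketch, not the project's actual code. It assumes the public REST endpoint `https://vision.googleapis.com/v1/images:annotate` called with an API key, and it assumes the preset images are regular grids of equal-sized tiles. The helper names (`tileRects`, `dataUrlToBase64`, `buildAnnotateBody`, `topLabels`, `annotate`) and the `maxResults = 5` default are hypothetical choices for illustration.

```typescript
// Minimal sketch (hypothetical helpers, not the project's code) of the
// tile -> base64 -> batched LABEL_DETECTION -> top-label pipeline.

// A rectangle inside the source image, in pixels.
interface TileRect {
  x: number;
  y: number;
  width: number;
  height: number;
}

// Assumption: the image is a regular rows x cols grid. Leftover pixels
// from sizes that don't divide evenly are simply ignored.
function tileRects(width: number, height: number, rows: number, cols: number): TileRect[] {
  const w = Math.floor(width / cols);
  const h = Math.floor(height / rows);
  const rects: TileRect[] = [];
  for (let r = 0; r < rows; r++) {
    for (let c = 0; c < cols; c++) {
      rects.push({ x: c * w, y: r * h, width: w, height: h });
    }
  }
  return rects;
}

// canvas.toDataURL() yields "data:image/jpeg;base64,<payload>";
// the API expects only the base64 payload after the comma.
function dataUrlToBase64(dataUrl: string): string {
  const comma = dataUrl.indexOf(",");
  return comma >= 0 ? dataUrl.slice(comma + 1) : dataUrl;
}

// One request entry per tile, so all tiles go out in a single call.
function buildAnnotateBody(base64Tiles: string[], maxResults = 5) {
  return {
    requests: base64Tiles.map((content) => ({
      image: { content },
      features: [{ type: "LABEL_DETECTION", maxResults }],
    })),
  };
}

interface LabelAnnotation {
  description: string;
  score: number;
}

interface AnnotateResponse {
  responses: { labelAnnotations?: LabelAnnotation[] }[];
}

// Keep only the highest-scoring label per tile (null if none came back).
// Does not rely on the order in which the API returns labels.
function topLabels(res: AnnotateResponse): (string | null)[] {
  return res.responses.map((r) => {
    const labels = r.labelAnnotations ?? [];
    if (labels.length === 0) return null;
    const best = labels.reduce((a, b) => (b.score > a.score ? b : a));
    return best.description;
  });
}

// Minimal structural type for fetch, so the sketch needs no DOM typings.
type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string },
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

// POST every tile at once to images:annotate (API key as a query parameter).
async function annotate(
  apiKey: string,
  base64Tiles: string[],
  fetchFn: FetchLike,
): Promise<AnnotateResponse> {
  const url = `https://vision.googleapis.com/v1/images:annotate?key=${encodeURIComponent(apiKey)}`;
  const resp = await fetchFn(url, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(buildAnnotateBody(base64Tiles)),
  });
  if (!resp.ok) throw new Error(`Vision API request failed: ${resp.status}`);
  return (await resp.json()) as AnnotateResponse;
}
```

In the browser, each rectangle from `tileRects` would be copied onto its own small canvas with the nine-argument form of `drawImage` and read back with `canvas.toDataURL("image/jpeg")`. Then `annotate(apiKey, tiles, fetch)` sends every tile in one batched request, and `topLabels` gives the text to draw back over each tile. Passing `fetch` in as a parameter is only a design choice that keeps the helpers testable without a network.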