Deepwater: a deep-learning-based enhancer for underwater pictures

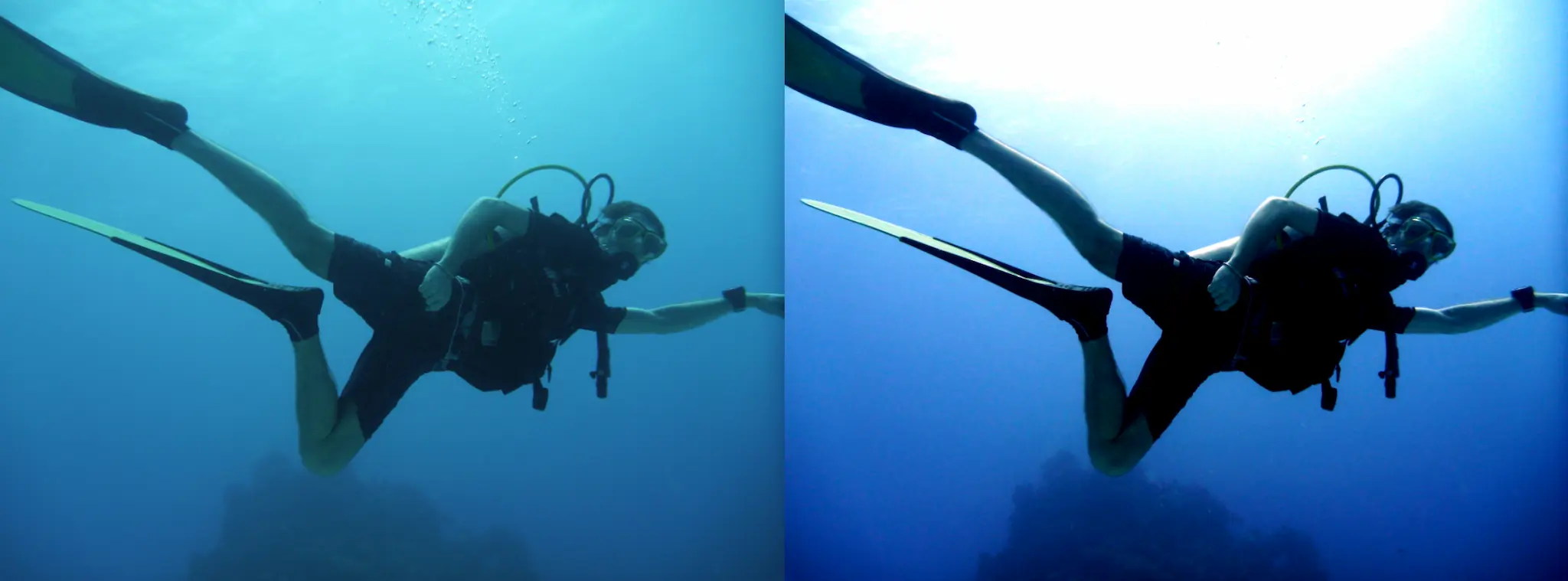

My partner and I are scuba divers and like to take underwater pictures. Unfortunately, when we shoot without a red filter, the pictures always need manual color correction to look OK.

The "auto" adjustment in most photo software, like Google Photos, improves things a bit, but manual color retouching is still needed for the best results.

So we asked ourselves: could we train an ML model to automate this? That's how we started working on a deep-learning-based enhancer for underwater pictures.

Training dataset

To generate our training dataset, we took high-quality underwater pictures (e.g. from National Geographic) and deteriorated them to look like pictures taken with our camera (low contrast, too blue, underexposed...). We then used data augmentation to generate many variations of each one (rotation, more or less color degradation...).
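The degradation and augmentation steps can be sketched in plain Python. This is a minimal illustration, not our training code: images are assumed to be nested lists of RGB floats in [0, 1], and the helper names (`degrade`, `rotate90`, `make_pairs`) and the degradation ranges are hypothetical choices for the example. Each good picture yields several (degraded input, clean target) training pairs.

```python
import random
from typing import List, Tuple

# Assumed representation: rows of (r, g, b) pixels, each channel a float in [0, 1].
Pixel = Tuple[float, float, float]
Image = List[List[Pixel]]


def clamp(x: float) -> float:
    return min(1.0, max(0.0, x))


def degrade(img: Image, contrast: float, exposure: float, red_loss: float) -> Image:
    """Make a good picture look like an unfiltered underwater shot.

    contrast < 1 pulls channels toward mid-grey (low contrast),
    exposure < 1 darkens the picture (underexposed),
    red_loss in [0, 1] removes part of the red channel (too blue).
    """
    out = []
    for row in img:
        new_row = []
        for r, g, b in row:
            r *= 1.0 - red_loss
            new_row.append(tuple(
                clamp((0.5 + (c - 0.5) * contrast) * exposure) for c in (r, g, b)
            ))
        out.append(new_row)
    return out


def rotate90(img: Image) -> Image:
    """Rotate the picture 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def make_pairs(clean: Image, n: int, seed: int = 0) -> List[Tuple[Image, Image]]:
    """Generate n (degraded input, clean target) pairs from one good picture."""
    rng = random.Random(seed)
    pairs = []
    for _ in range(n):
        target = clean
        for _ in range(rng.randrange(4)):  # random rotation: 0, 90, 180 or 270 degrees
            target = rotate90(target)
        degraded = degrade(
            target,
            contrast=rng.uniform(0.5, 0.9),  # more or less contrast loss
            exposure=rng.uniform(0.5, 0.9),  # more or less underexposure
            red_loss=rng.uniform(0.3, 0.8),  # more or less color degradation
        )
        pairs.append((degraded, target))
    return pairs
```

The target is rotated before degrading so that input and target always stay aligned; a real pipeline would do the same on arrays, with similar per-pixel formulas.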

Convolutional neural network

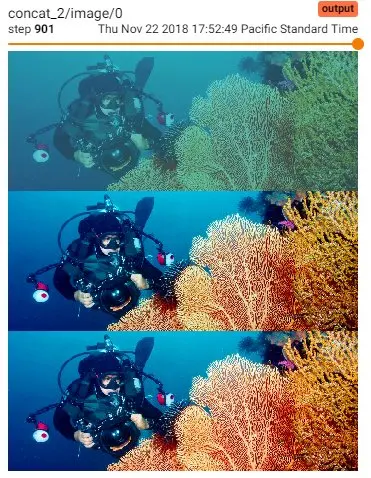

Our first approach to give promising results was a convolutional neural network.

Not bad at all! The problem with this approach is that the output loses sharpness. This is because it is an "image-to-image" model: it generates every output pixel itself instead of adjusting the pixels of the original picture.

Fully connected neural network

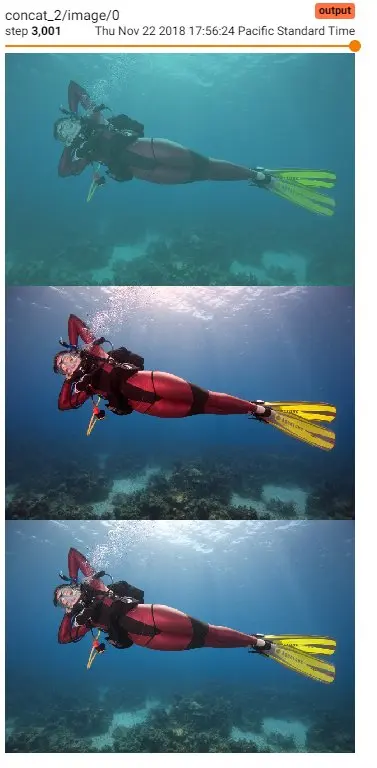

Our second approach was a fully connected neural network that outputs just a few image manipulation parameters (contrast, brightness, saturation...), which are then applied to the original image. By design, this removes the sharpness issue: the original pixels are adjusted, never regenerated. After adding more layers, we got very good results on our training and eval datasets, but not on real pictures.
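Here is a sketch of the second stage, under assumptions: the network's output is taken to be three numbers, and the brightness, contrast and saturation formulas below are hypothetical, one common convention among several. What it illustrates is that each output pixel depends only on the same pixel of the full-resolution original, so no detail is lost.

```python
from typing import List, Tuple

Pixel = Tuple[float, float, float]
Image = List[List[Pixel]]


def clamp(x: float) -> float:
    return min(1.0, max(0.0, x))


def luma(r: float, g: float, b: float) -> float:
    # Rec. 601 luma weights, used here as the pixel's grey level.
    return 0.299 * r + 0.587 * g + 0.114 * b


def apply_params(img: Image, brightness: float, contrast: float, saturation: float) -> Image:
    """Apply predicted global adjustments to the original, full-resolution image.

    brightness: offset added to every channel (0 = unchanged)
    contrast:   scale around mid-grey 0.5 (1 = unchanged)
    saturation: scale of each channel's distance from the grey level
                (1 = unchanged, 0 = greyscale)
    """
    out = []
    for row in img:
        new_row = []
        for r, g, b in row:
            y = luma(r, g, b)
            # Saturation: push channels away from (or toward) the grey level.
            r, g, b = (y + (c - y) * saturation for c in (r, g, b))
            # Contrast around mid-grey, then brightness offset.
            new_row.append(tuple(
                clamp(0.5 + (c - 0.5) * contrast + brightness) for c in (r, g, b)
            ))
        out.append(new_row)
    return out


# Hypothetical output of the fully connected network for one picture.
params = {"brightness": 0.1, "contrast": 1.3, "saturation": 1.2}
print(apply_params([[(0.2, 0.4, 0.6)]], **params))
```

Because the network only predicts a handful of numbers, it can look at a small downscaled copy of the picture while the parameters are applied to the original at full resolution.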

The problem is that real-life pictures (like the one above) are too different from what we trained and evaluated on. The model does its job well on data like its training set, but our generated training dataset isn't really representative of the pictures we usually take underwater. So the next step would be to go back to gathering or generating better training data, probably with a bit less data augmentation, and using our own corrected pictures (instead of working backward by deteriorating great pictures).

Find the source code on GitHub

Web app

We were able to load and run our model client-side in a web page with TensorFlow.js.

The web app is hosted on Firebase Hosting, and a Google Cloud Build trigger re-deploys it automatically whenever we push to the GitHub repo.

The training code is written in Python and outputs a "SavedModel". Using a tool named tensorflowjs_converter, we converted the model into a format that can be hosted and loaded by TensorFlow.js.
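The conversion step could be wrapped in a small Python helper like the one below. It assumes the SavedModel is converted to a TensorFlow.js graph model (`--output_format=tfjs_graph_model`); the directory names `saved_model` and `web_model` and the `convert` helper are hypothetical. By default it only returns the command, so it runs without the `tensorflowjs` package installed.

```python
import shlex
import subprocess
from typing import List


def converter_command(saved_model_dir: str, output_dir: str) -> List[str]:
    """Build a tensorflowjs_converter call turning a SavedModel into a graph model."""
    return [
        "tensorflowjs_converter",
        "--input_format=tf_saved_model",     # input is a TensorFlow SavedModel directory
        "--output_format=tfjs_graph_model",  # output is model.json plus weight shards
        saved_model_dir,
        output_dir,
    ]


def convert(saved_model_dir: str, output_dir: str, dry_run: bool = True) -> str:
    """Return the shell command; actually run it only when dry_run is False."""
    cmd = converter_command(saved_model_dir, output_dir)
    if not dry_run:
        # Requires the tensorflowjs pip package, which installs the CLI.
        subprocess.run(cmd, check=True)
    return shlex.join(cmd)


if __name__ == "__main__":
    print(convert("saved_model", "web_model"))
```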

The model is stored in a public Cloud Storage bucket, as it is too big to keep in git.
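Since the model lives in the bucket rather than in git, `model.json` has to be uploaded together with every weight shard it references, because TensorFlow.js fetches the shards relative to the `model.json` URL. The sketch below lists those files from the converter's `weightsManifest` and builds their public Cloud Storage URLs; the bucket name and object paths are hypothetical.

```python
import json
from typing import List
from urllib.parse import quote


def files_to_upload(model_json_text: str) -> List[str]:
    """Return model.json plus every weight shard listed in its weightsManifest."""
    manifest = json.loads(model_json_text)
    files = ["model.json"]
    for group in manifest.get("weightsManifest", []):
        files.extend(group["paths"])
    return files


def public_url(bucket: str, object_name: str) -> str:
    # Publicly readable Cloud Storage objects are served from this host;
    # quote() keeps "/" so object paths stay readable.
    return f"https://storage.googleapis.com/{bucket}/{quote(object_name)}"
```

The web app would then pass the `model.json` URL to `tf.loadGraphModel`. Because the page is served from a different origin than the bucket, the bucket likely also needs a CORS configuration allowing that origin.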

See the source code of the web app on GitHub

Try the web app at deepwater-project.web.app.